Why is standard deviation a better measure of risk?

In finance, the standard deviation is applied to an investment's annual rate of return to measure its volatility. Standard deviation is also known as historical volatility, and investors use it as a gauge of how much volatility to expect. In short, the standard deviation shows whether a project carries lower or higher risk, which is why it is considered a better measure of risk.

Standard Deviation Is a Better Measure

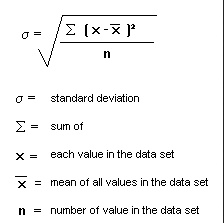

Standard deviation is a measure of the variation in a distribution. It equals the square root of the arithmetic mean of the squared deviations from the arithmetic mean, that is, the square root of the variance.
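To make the definition concrete, here is a minimal Python sketch that computes the standard deviation exactly as defined above and applies it to a short series of annual returns. The return figures and the `std_dev` helper are hypothetical, for illustration only. The definition divides by the number of observations (the population standard deviation); when historical volatility is estimated from a sample of past returns, analysts often divide by `n - 1` instead, which is what Python's `statistics.stdev` does.

```python
import math
import statistics


def std_dev(values: list[float]) -> float:
    """Population standard deviation: the square root of the arithmetic
    mean of the squared deviations from the arithmetic mean."""
    if not values:
        raise ValueError("need at least one value")
    mean = sum(values) / len(values)
    # Variance = average of the squared deviations from the mean.
    variance = sum((x - mean) ** 2 for x in values) / len(values)
    # Standard deviation = square root of the variance.
    return math.sqrt(variance)


# Hypothetical annual rates of return (as decimals), for illustration only.
annual_returns = [0.10, -0.05, 0.15, 0.02, 0.08]

print(f"standard deviation: {std_dev(annual_returns):.4f}")

# Cross-check against the standard library's population standard deviation.
assert math.isclose(std_dev(annual_returns), statistics.pstdev(annual_returns))
```

A constant series has zero standard deviation, and the more the yearly returns wander from their average, the larger the result becomes.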

The reasons why standard deviation is a better measure of risk are as follows.

The most commonly used measure of risk for assets or securities is the standard deviation. The larger the standard deviation, the greater the dispersion of returns and hence the greater the distribution's stand-alone risk. Conversely, the lower the standard deviation, the less risky the project.
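The comparison below is a small sketch with two hypothetical projects whose yearly returns have the same average but very different dispersion; the figures are invented for illustration. Because the mean alone cannot tell the projects apart, it is the standard deviation that flags project B as the riskier one.

```python
import statistics

# Hypothetical yearly returns (%) for two projects with the same average return.
project_a = [9.0, 10.0, 11.0, 10.0, 10.0]   # returns cluster tightly around 10%
project_b = [-5.0, 25.0, 0.0, 20.0, 10.0]   # returns swing widely around 10%

for name, returns in [("A", project_a), ("B", project_b)]:
    mean = statistics.mean(returns)
    sd = statistics.pstdev(returns)  # population standard deviation
    print(f"Project {name}: mean = {mean:.1f}%, standard deviation = {sd:.2f}%")
```

Both projects average 10% a year, but project B's much larger standard deviation reflects its far wider spread of outcomes, which is exactly the stand-alone risk described above.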

If you want to know how 'risky' a fund or a project is, there are other ways of assessing it. For instance, you can compare the fund's annual returns over the past several years, analyze how it has performed in bull markets and in bear markets, or compare compound returns over several time periods. Using compound returns has one problem, though: a single year's exceptional performance can distort them. To correct for this, Fund Counsel suggests dropping the exceptional year and recomputing the compound rate of return.
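The compound-return problem and the suggested correction can be sketched as follows, assuming the usual definition of a compound (geometric average) annual return. The fund's returns and the `compound_annual_return` helper are hypothetical. One unusually strong year lifts the compound return well above what the other years delivered; dropping that year and recomputing gives a figure closer to the fund's typical performance.

```python
import math


def compound_annual_return(returns: list[float]) -> float:
    """Geometric (compound) average annual return for a series of yearly
    returns given as decimals, e.g. 0.08 for +8%."""
    if not returns:
        raise ValueError("need at least one yearly return")
    # Total growth factor over all years, e.g. 1.05 * 1.07 * ...
    growth = math.prod(1.0 + r for r in returns)
    # Annualize: the constant yearly rate that produces the same total growth.
    return growth ** (1.0 / len(returns)) - 1.0


# Hypothetical fund: four ordinary years and one exceptional year (+60%).
returns = [0.05, 0.07, 0.60, 0.04, 0.06]

with_outlier = compound_annual_return(returns)
# Drop the single exceptional (best) year and recompute.
without_outlier = compound_annual_return([r for r in returns if r != max(returns)])

print(f"with exceptional year:    {with_outlier:.2%}")
print(f"without exceptional year: {without_outlier:.2%}")
```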

Among the measures mentioned above, however, standard deviation is considered the better measure of risk because it makes it easy to tell whether a project is risky. If the standard deviation is high relative to comparable projects, the project is risky; if it is low, the project is less risky.